In nature, vertebrates evolved mainly two different eye arrangements:

- two frontal eyes (especially predators): they give depth perception, which helps to estimate how close the prey is, but only over a narrow region.

- two lateral eyes (especially prey): they cover a wide area to detect possible predators, but without depth information (that’s not a big problem: if they see a suspicious movement they just start running).

Evolution also found other solutions to the problem of perceiving depth (like the “sonar” of bats), but since humans usually come equipped only with eyes, and since I don’t want to make you undergo complex surgery to test the content of this article, I will focus on depth perceived through the eyes, showing how we can effectively see a 3D image on a 2D surface.

Stereoscopes

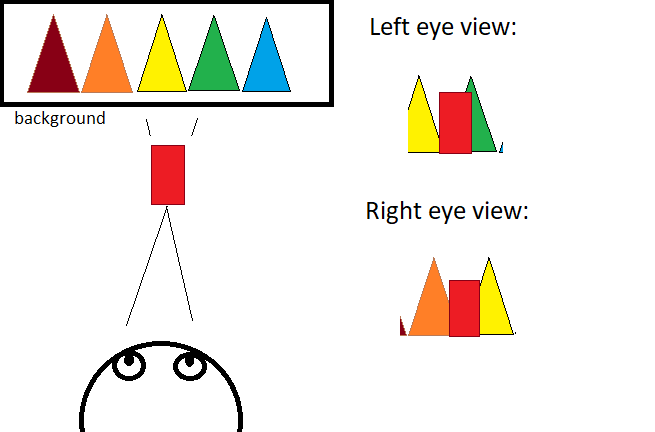

Starting from the basics, our eyes are separated by about 5-7 cm (ok, I’m European and I’m not gonna use any furlong–firkin–fortnight-like system in my articles, just the metric system). Because of this distance, when we look at an object close to our eyes, each eye sees it in a different position with respect to the background (the left eye sees the object shifted to the right against the background, and vice versa). Our brain puts this information together, which allows us to perceive depth and estimate distances.

This fact was exploited in stereoscopes something like 200 years ago: imagine a sort of binoculars that, in place of lenses, has two different images, namely the left-eye view and the right-eye view of the same scene (e.g. the two views in the image above). When we look at these images (each eye sees only its own view), the brain tries to interpret what it sees. Since the input from the eyes is very similar to a normal look at that scene, the brain does its magic and we perceive the scene in 3D.
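To get a feeling for the numbers, here is a rough sketch of the angle between the two lines of sight when both eyes look at the same point. It assumes a simplified geometry (the point sits straight ahead of the midpoint between the eyes); the function name `vergenceAngleDeg` and the default 6 cm eye distance are choices made only for this illustration.

```javascript
// Angle (in degrees) between the lines of sight of the two eyes when both
// look at a point straight ahead, `distanceCm` away from the midpoint between them.
// Simplified geometry: theta = 2 * atan((eyeDistance / 2) / distance).
function vergenceAngleDeg(distanceCm, eyeDistanceCm = 6) {
  const halfAngleRad = Math.atan((eyeDistanceCm / 2) / distanceCm);
  return (2 * halfAngleRad * 180) / Math.PI;
}

console.log(vergenceAngleDeg(30).toFixed(2));   // object at 30 cm
console.log(vergenceAngleDeg(1000).toFixed(2)); // object at 10 m
```

With these inputs the angle is about 11.4 degrees at 30 cm and about 0.34 degrees at 10 m, which gives an idea of why the effect is strong for near objects and almost disappears for distant ones.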

Autostereograms

An even more sophisticated 2D stereoscopic image is the autostereogram. If you look at it normally you see just random pixels or, at best, a repetitive pattern, but it contains much more than this. With your nose close to the image (let’s say 10 cm), you have to “look behind” the image, as when you look at the horizon. One way to do this is to hold the autostereogram in front of your eyes while looking at the horizon (you will see the image blurred), and slowly bring the image closer to your eyes. At a certain point the two eyes will see two different repetitions of the pattern in the same position and the brain will start to form an image in focus.

The pattern is not just a copy of the same image: each repetition is the previous one with some added 3D information that makes it slightly different, like the two images in the stereoscope, so the final effect is that the brain starts to perceive a 3D volume in the image!
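The idea of "each repetition is the previous one, slightly changed" can be sketched for a single row of a random-dot autostereogram. This is one common simplified construction, not necessarily how any particular image was made; the function name `autostereogramRow`, the `period` parameter and the integer depth values are assumptions made for the example.

```javascript
// Build one row of a random-dot autostereogram.
// depthRow[x] is an integer: 0 for the background, larger values for closer points.
// period is the width (in pixels) of one repetition of the pattern.
function autostereogramRow(depthRow, period, random = Math.random) {
  const row = new Array(depthRow.length);
  for (let x = 0; x < depthRow.length; x++) {
    if (x < period) {
      // First repetition: plain random dots (0 = black, 1 = white).
      row[x] = random() < 0.5 ? 0 : 1;
    } else {
      // Keep the depth in a range where the source pixel is already computed.
      const depth = Math.min(Math.max(depthRow[x], 0), period - 1);
      // Copy the dot from the previous repetition; for closer points the
      // distance between the two copies gets shorter.
      row[x] = row[x - period + depth];
    }
  }
  return row;
}

// A flat background with a raised strip in the middle.
const depthRow = [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 2, 2, 2, 2, 0, 0, 0, 0, 0, 0];
console.log(autostereogramRow(depthRow, 6).join(""));
```

Repeating this for every row of a depth map gives the full image: where the depth is 0 the pattern repeats exactly, and where it is not the repetitions are shifted a little, which is the difference the two eyes pick up.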

Anaglyphs

Finally, the classic 3D images that most of us looked at in magazines with 3D glasses when we were kids.

These images also contain two distinct views of the same scene: one is usually drawn in red (or orange) and the other in green (or blue). The lenses in the glasses do the rest of the work: they are in fact color filters, so the red lens lets only the red scene reach one eye, while the green lens shows only the other scene to the other eye. As usual, the brain interprets the input as best it can and makes you perceive a 3D image.

Getting a stream of 2 webcams

In this article we’ll see how to combine in real time the streams of two webcams to obtain a sequence of anaglyphs, so if you watch the video with 3D glasses, you’ll see a 3D video.

We need 2 webcams, placed about 5-7 cm apart. In our page they will be used in combination: the red channel from one and the green/blue channels from the other. It seems easy, let’s try!

You can request access to a webcam with this code, which uses navigator.mediaDevices:

    const constraints = {
      video: true
    };

    navigator.mediaDevices.getUserMedia(constraints)
      .then((stream) => { /* use the stream, e.g. assign it to a video tag */ })
      .catch((err) => console.error(err));

It’s not the best of starts: all the examples that use the webcam via JavaScript fail or don’t work as expected. Reading the documentation, on localhost you can fully use these APIs, but otherwise they only work in a secure context. Ok, never mind, I’ll work directly in an HTML file on this website.

The examples let us select a webcam by device id, but this gives an “OverconstrainedError”, since applying the 2 different deviceId constraints to getUserMedia gives an empty set.

With the following constraints it works (but you cannot choose the exact device by id):
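A possible alternative, sketched here under the assumption that each camera is requested with its own getUserMedia call instead of putting both ids in a single request, looks like this. It is not tested on the setup described in this article; the helper names `pickVideoInputs`, `constraintsFor` and `openTwoWebcams` are hypothetical.

```javascript
// Keep only the cameras from the list returned by enumerateDevices().
function pickVideoInputs(devices) {
  return devices.filter((device) => device.kind === "videoinput");
}

// Constraints that ask for exactly one camera, identified by its deviceId.
function constraintsFor(deviceId) {
  return { audio: false, video: { deviceId: { exact: deviceId } } };
}

// Browser only: open the first two cameras with two separate requests.
async function openTwoWebcams() {
  // Device ids and labels may be hidden until camera access is granted,
  // so ask for a generic stream first and release it right away.
  const probe = await navigator.mediaDevices.getUserMedia({ video: true });
  probe.getTracks().forEach((track) => track.stop());

  const cameras = pickVideoInputs(await navigator.mediaDevices.enumerateDevices());
  if (cameras.length < 2) {
    throw new Error("Two webcams are required, found " + cameras.length);
  }
  // One getUserMedia call per camera, each with a single deviceId constraint.
  return Promise.all(
    cameras.slice(0, 2).map((camera) =>
      navigator.mediaDevices.getUserMedia(constraintsFor(camera.deviceId))
    )
  );
}
```

In a page, `openTwoWebcams()` would resolve to an array of two streams, each of which can be assigned to the `srcObject` of a video tag.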

    const constraints1 = {
      audio: { deviceId: undefined },
      video: { facingMode: 'environment' }
    };

    const constraints2 = {
      audio: { deviceId: undefined },
      video: { facingMode: 'user' }
    };

Mixing the streams

Ok, now let’s mix the two video streams into an anaglyph on a canvas. This is quite easy: we use two hidden canvases, draw a frame of each video into one of them, then mix them in a combined canvas, using only the red channel of one video and the green/blue channels of the other. In my solution I also added a checkbox (with id=colType) to choose whether to render the video in red/green or in orange/blue. The other variables are:

- cvs1/cvs2 and ctx1/ctx2: the 2 hidden canvases with their contexts.

- cvs and ctx: the canvas where I draw the result, with its context.

- video1/video2: the two video tags where I’m showing the webcam streams.

    // Read the checkbox once per frame instead of once per pixel.
    const orangeBlue = document.getElementById("colType").checked;

    // Grab the current frame of the first webcam.
    ctx1.clearRect(0, 0, cvs1.width, cvs1.height);
    ctx1.drawImage(video1, 0, 0, cvs1.width, cvs1.height);
    const dataCh1 = ctx1.getImageData(0, 0, cvs1.width, cvs1.height).data;

    // Grab the current frame of the second webcam.
    ctx2.clearRect(0, 0, cvs2.width, cvs2.height);
    ctx2.drawImage(video2, 0, 0, cvs2.width, cvs2.height);
    const dataCh2 = ctx2.getImageData(0, 0, cvs2.width, cvs2.height).data;

    // The three canvases are assumed to have the same size.
    const imageDataCombined = ctx.createImageData(cvs.width, cvs.height);
    const combined = imageDataCombined.data;

    for (let i = 0; i < combined.length; i += 4) {
      // Red channel: always from the first webcam.
      combined[i] = dataCh1[i];
      // Green channel: first webcam in orange/blue mode, second webcam in red/green mode.
      combined[i + 1] = orangeBlue ? dataCh1[i + 1] : dataCh2[i + 1];
      // Blue channel: always from the second webcam.
      combined[i + 2] = dataCh2[i + 2];
      // Alpha channel: fully opaque.
      combined[i + 3] = 255;
    }

    ctx.putImageData(imageDataCombined, 0, 0);
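The code above produces a single frame, so to get a video it has to run again for every new frame. A minimal sketch of such a loop follows, assuming the mixing code is wrapped in a function (hypothetically called `drawAnaglyph` here). The `startRenderLoop` helper is also an assumption of this example; it takes the scheduler as a parameter so that the loop can be tried outside a browser too.

```javascript
// Calls renderFrame once per scheduled tick until the returned function is called.
// `schedule` receives the callback to run for the next frame.
function startRenderLoop(renderFrame, schedule) {
  let running = true;
  function tick() {
    if (!running) return;
    renderFrame();
    schedule(tick);
  }
  schedule(tick);
  return function stop() {
    running = false;
  };
}

// In the page (drawAnaglyph is a hypothetical wrapper around the mixing code):
// const stop = startRenderLoop(drawAnaglyph, (cb) => requestAnimationFrame(cb));
```

Using `requestAnimationFrame` as the scheduler keeps the drawing in step with the screen refresh, and calling the returned `stop` function ends the loop.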

Here is a working example (you need 2 webcams connected to your PC to see it correctly):

[Interactive demo: Red/Green or Orange/Blue selector]

Unfortunately I only have 2 webcams with very different optics, so for now I can’t show any example. I would also like to add a Record button, but I prefer to do that later; at least for now you have a stub of the functionality.

You can find the code on my github:

https://github.com/zagonico86/my-snippets/tree/main/js

This post is a sibling of the ASCII art webcam article.