I was recently invited to talk about the history of AI and its current state of the art at UNIPOP, the “People’s University of Povegliano”. This gave me the chance to delve into the origins and early stages of AI, which I had previously somewhat neglected, despite having studied its developments from the 1980s onward in some detail about 15 years ago at university.

What I enjoyed most about the evening was the generational exchange: a dialogue between myself, a Millennial who studied and followed step by step the whole evolution of modern computing (the first powerful PCs, the Internet, search engines, peer-to-peer networks, the mobile revolution and the AI revolution), and an audience 20 to 30 years my senior, more attached to the first era of computing, and interested in but worried by modern developments. Our different perspectives and concerns revealed not only when each of us first encountered technology, but also how deeply it shapes our lives today.

I am publishing the material prepared for that talk as posts on this blog, hoping it can be useful to others as well. This first post is mostly historical; one or two more theoretical posts may follow, trying to explain how AI works.

What is Artificial Intelligence?

Artificial Intelligence (AI) is a technology that enables computers to simulate certain human abilities, such as:

- translating or generating text

- recognizing or creating images

- solving problems and making decisions

It is important to understand how it differs from traditional programs: traditional programs follow predefined instructions and behave in a predictable way. AI, on the other hand, learns from data and improves its responses over time. For now we won’t explain how this is possible; a later post on neural networks will show it.

The Third Industrial Revolution

At school, we usually learned about the First and Second Industrial Revolutions:

- First Industrial Revolution (1760–1840): it began in the United Kingdom and was made possible by a surge in available labor, partly due to the mechanization of agriculture. This revolution introduced mechanical looms and steam-powered machinery, radically transforming textile production and factory work.

- Second Industrial Revolution (1860–1914): marked by the expansion of industrialization into new fields such as electricity, chemistry, and petroleum-based energy. It led to innovations like the internal combustion engine, electric lighting, and mass production techniques (e.g. assembly lines). This phase fueled rapid urbanization and technological progress.

However, what is less commonly taught is that we are now living in the midst of a Third Industrial Revolution, driven by technologies that are reshaping the world around us!

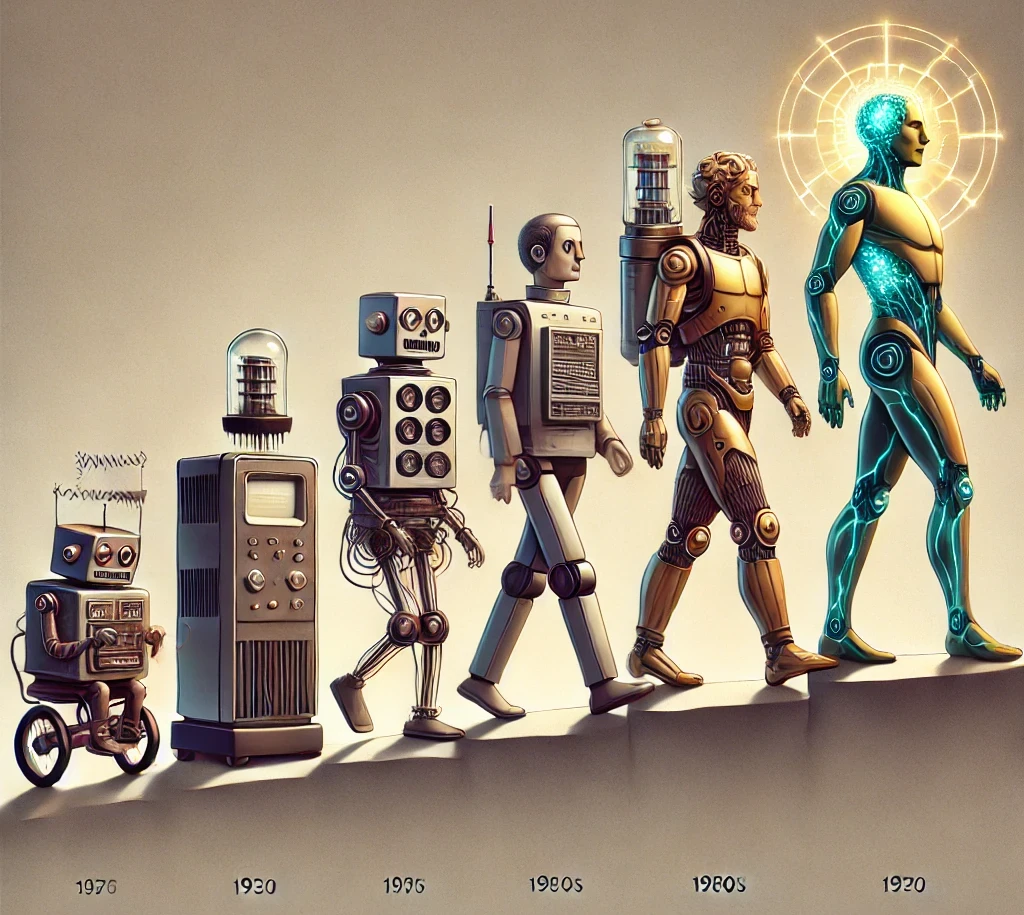

- Third Industrial Revolution (1970-present): often called the Digital Revolution, it began with the rise of electronics, computing, and telecommunications. Today, it includes Artificial Intelligence, automation, robotics, and data-driven technologies that influence almost every aspect of our lives — from how we work and learn, to how we communicate and consume information.

Between the Second and Third Industrial Revolutions

The roots of AI can be traced back to the massive technological development that followed the Second Industrial Revolution, which also shaped the nature of the two world wars. Let’s recall that World War I was drastically different from previous wars because it was a mass-scale conflict: mass production of weapons, mass mobilization of soldiers, and industrial-scale logistics.

Moreover, the rapid advancement in communication systems, transportation, and early computing between 1920 and 1940 meant that by the time World War II began, warfare had evolved into something that, in many aspects, resembled modern warfare — highly technological, fast, and heavily industrialized.

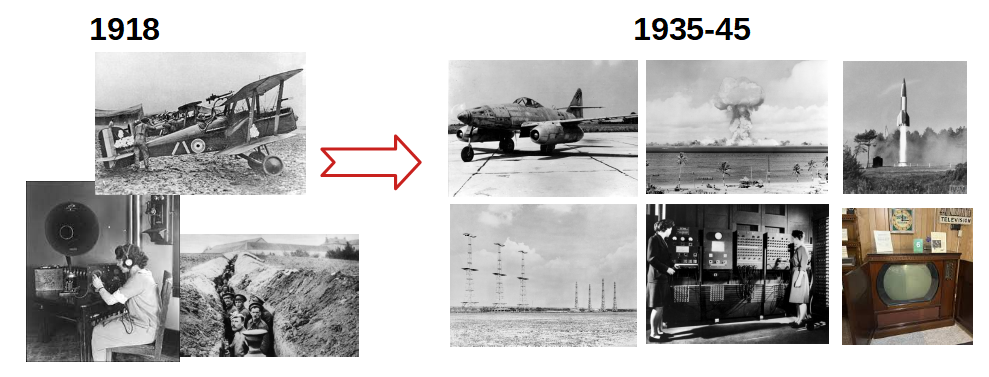

We close this section with this image, which highlights how drastically the world changed in just twenty years. In 1918, World War I was still fought with rifles in trenches, and relied on the early ancestors of modern technology — propeller aircraft, wired radio systems, basic field telephony.

Between 1935 and 1945, warfare evolved into a radically different form: jet aircraft, ballistic missiles, nuclear bombs. Meanwhile, radar systems, electronic computers, and television were emerging, marking massive leaps in communication, control, and information processing.

These rapid developments happened across separate branches of science and technology — physics, engineering, mathematics, electronics, and computing — which, by converging in the following years, would lead to the birth of Artificial Intelligence, as we’ll explore in the next section.

An out of topic personal thought: before 1920 we barely

Mathematics and logic studies

Before the birth of AI, scholars were trying to define what mathematics can and cannot prove, with Gödel’s Incompleteness Theorem as the key result. In those years the first programmable electronic computers were emerging, so similar studies addressed what computers can and cannot compute, supported by the famous Turing Machine model.

Key milestones in this path:

- 1854 – Binary algebra

George Boole introduces Boolean algebra, showing that logical reasoning can be expressed using binary values (true/false).

- 1879 – Formal logic

Gottlob Frege develops predicate logic, a formal system to express logical statements and their relationships.

- 1900–1931 – Formalization of mathematics

David Hilbert launches a program to formalize all of mathematics using a complete, consistent set of axioms and rules.

- 1931 – Gödel’s Incompleteness Theorem

Kurt Gödel proves that any consistent, sufficiently powerful formal system (one that can express arithmetic) contains true statements that cannot be proven within the system itself.

- 1936 – Turing machine and computability

Alan Turing defines the theoretical model of a computer, the Turing Machine, and explores the limits of computation. He formulates the halting problem: there is no general method to determine whether a given program will eventually stop or run forever.

Sample Turing Machine

We already discussed the Turing Machine in this article. To make it more concrete, we describe a simple Turing Machine that stops the first time it sees three consecutive 1s on the tape:

States:

- S₀ – Start state.

- S₁ – Found one 1.

- S₂ – Found two 1s in a row.

- S_H – Found three consecutive 1s → halt.

How it works step-by-step:

- From S₀, it moves right until it reads a 1, then transitions to S₁.

- In S₁, if it reads another 1, it goes to S₂. If it reads 0, it returns to S₀.

- In S₂, if it reads a third 1, it moves to the halting state S_H.

- If in S₂ it reads a 0, it resets to S₀ and continues scanning.

- The machine stops only when it successfully detects 1-1-1 in sequence.
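The states and transitions described here can be sketched in a few lines of Python. This is only an illustration under some assumptions: the tape is a finite string of `0` and `1` characters (a real Turing Machine has an unbounded tape), the head always moves one cell to the right, and the names `TRANSITIONS` and `run` are invented for this example.

```python
# Transition table: (current state, symbol read) -> next state.
# The head always moves one cell to the right after each step.
TRANSITIONS = {
    ("S0", "0"): "S0",  # nothing found yet, keep scanning
    ("S0", "1"): "S1",  # found one 1
    ("S1", "0"): "S0",  # streak broken, start over
    ("S1", "1"): "S2",  # found two 1s in a row
    ("S2", "0"): "S0",  # streak broken, start over
    ("S2", "1"): "SH",  # found three 1s in a row: halt
}


def run(tape):
    """Scan the tape left to right; return (final state, head position)."""
    state, head = "S0", 0
    # Assumption: we also stop when the finite tape ends without reaching SH.
    while state != "SH" and head < len(tape):
        state = TRANSITIONS[(state, tape[head])]
        head += 1
    return state, head


print(run("0110111010"))  # ('SH', 7): halts right after the third consecutive 1
print(run("0101"))        # ('S1', 4): tape ended without three 1s in a row
```

The whole "program" lives in the transition table: changing the table changes what the machine does, while the `run` loop stays the same.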

Given this example, the reader can imagine more complex machines, such as one that adds two numbers or, with some more effort, one that multiplies them. With a sufficiently complex state graph we can “build” a general-purpose computer.

The Steampunk era (early Mechanical Calculators)

Before the advent of electronics, many scholars tried to make calculation easier, or to program machines, using mechanical devices that are conceptually the predecessors of electronic computers. Here are some of the main steps along this line:

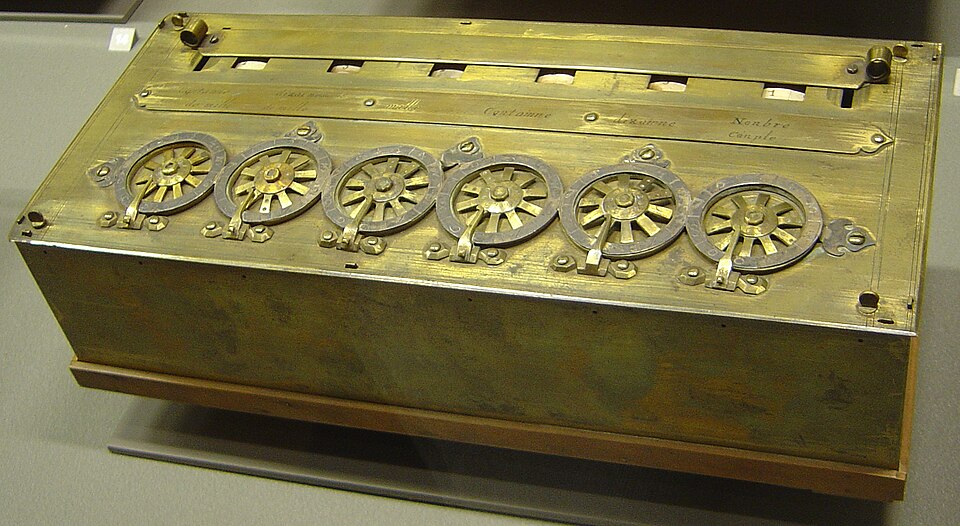

- 1642 – Pascaline

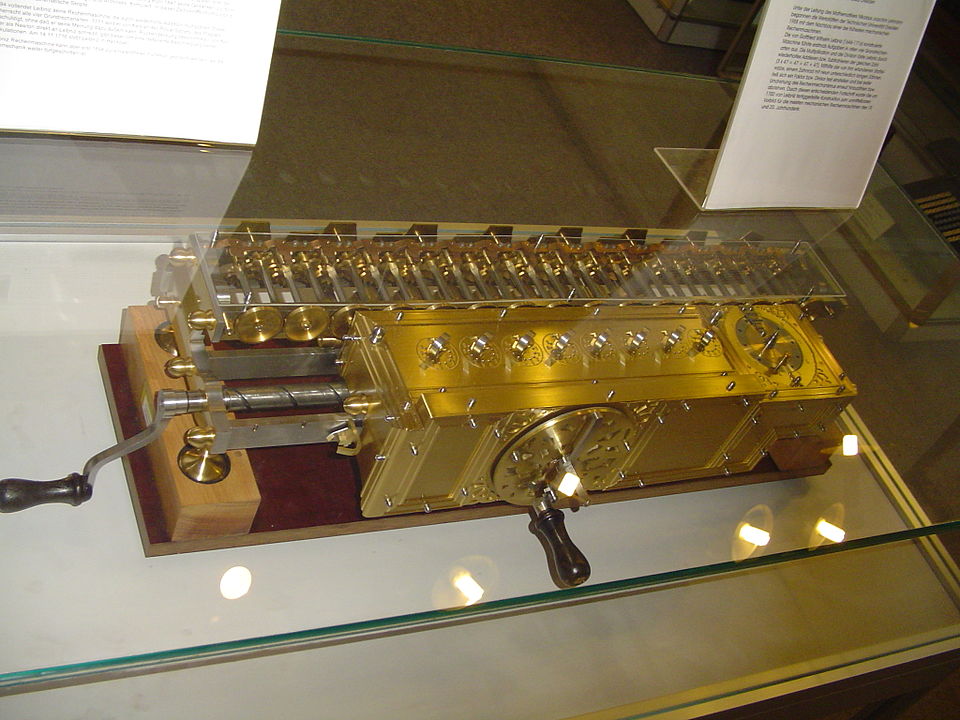

Designed by Blaise Pascal, this mechanical calculator could perform additions and subtractions using a system of gears and dials.

- 1673 – Leibniz’s Stepped Reckoner

An improvement on Pascal’s design, it could also multiply and divide, using a stepped drum mechanism.

Images taken from Wikipedia, Pascaline (David.Monniaux, CC BY-SA 3.0, https://commons.wikimedia.org/w/index.php?curid=186079) and Leibniz Stepped Reckoner (User:Kolossos – recorded by him in de:Technische Sammlungen der Stadt Dresden (with photo permission), CC BY-SA 3.0, https://commons.wikimedia.org/w/index.php?curid=925505).

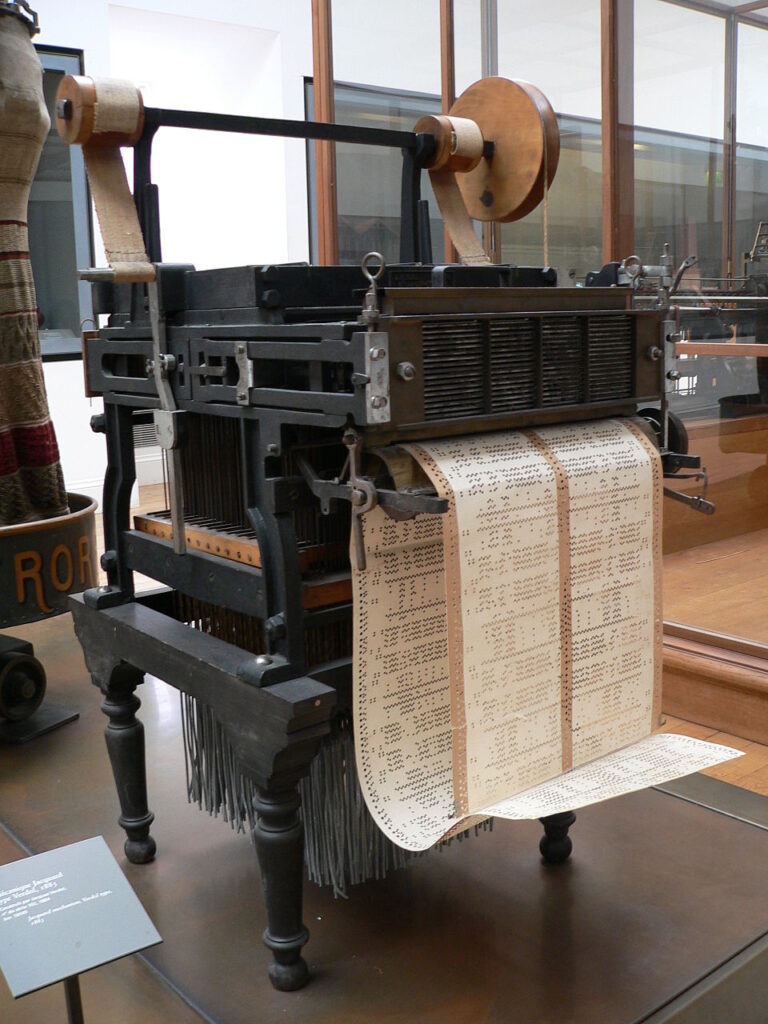

- 1801 – Jacquard Loom

This weaving machine used punched cards to automate complex textile patterns — a key concept in programmable machines.

- 1837 – Analytical Engine

Conceived by Charles Babbage and annotated by Ada Lovelace, it was the first design for a general-purpose mechanical computer, complete with memory, control flow, and a basic CPU-like structure.

Mechanical calculators did not have a major impact on society, but they sparked interest among scholars and established the idea of automatic computation.

The Dieselpunk era (First electronic computers)

The transition from mechanical computation to electronic computing was marked by a series of foundational breakthroughs in the late 1930s and 1940s.

- 1937 – Claude Shannon demonstrated that Boolean algebra could be implemented using electrical circuits, establishing a direct link between logic and hardware.

- World War II accelerated the development of both electromechanical and electronic computers, as military needs pushed for faster and more reliable calculations.

- 1945 – John von Neumann introduced the architecture that underpins most modern computers, with separate units for memory and processing, and a stored program model.
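To make the link between logic and arithmetic more tangible, here is a minimal sketch of how addition can be built from Boolean operations alone. It assumes that Python booleans stand in for switches that are open or closed; the function names `half_adder`, `full_adder` and `add_bits` are invented for this illustration and do not come from Shannon's work.

```python
def half_adder(a, b):
    """Add two bits using only Boolean operations."""
    total = a != b   # XOR: the sum bit
    carry = a and b  # AND: the carry bit
    return total, carry


def full_adder(a, b, carry_in):
    """Add two bits plus an incoming carry by chaining two half adders."""
    s1, c1 = half_adder(a, b)
    s2, c2 = half_adder(s1, carry_in)
    return s2, c1 or c2


def add_bits(x, y, width=4):
    """Add two small integers bit by bit; return (result, final carry)."""
    carry = False
    result = 0
    for i in range(width):
        a = bool((x >> i) & 1)
        b = bool((y >> i) & 1)
        s, carry = full_adder(a, b, carry)
        result |= int(s) << i
    return result, carry


print(add_bits(5, 6))  # (11, False)
print(add_bits(9, 8))  # (1, True): 17 does not fit in 4 bits, so the carry is set
```

Every step uses only AND, OR and XOR, which is exactly the kind of operation that relays or vacuum tubes can perform.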

Several pioneering machines were developed during this period, each representing a key step in the evolution of computing:

- 1939 – Harvard Mark I (USA):

The first large-scale electromechanical computer, built using relays. It was used by the U.S. Navy for ballistic and mathematical calculations. - 1941 – Zuse Z3 (Germany):

The first fully automatic, programmable electromechanical computer. Created by Konrad Zuse in Nazi Germany, it laid the groundwork for digital computing; it was not used operationally, as the Nazi regime failed to recognize its strategic value. - 1943 – Colossus (United Kingdom):

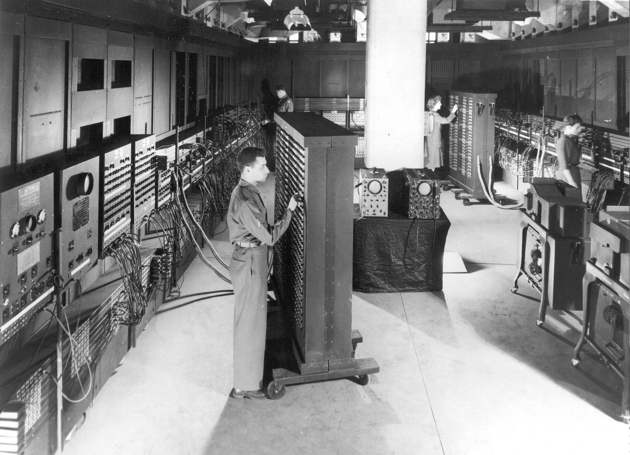

Built at Bletchley Park, it was the world’s first electronic computer using vacuum tubes. It was designed to decrypt messages encoded with the German Enigma and Lorenz machines. - 1944 – ENIAC (USA):

The first general-purpose electronic computer. Developed by the U.S. Army to calculate artillery trajectories, it used 17,000 vacuum tubes and occupied an entire room.

These machines were designed in different countries, often in secrecy, and for different purposes — from code-breaking to ballistics — but they all share a legacy: they represent the birth of modern computing, and laid the technological foundations for the development of Artificial Intelligence.

At that time these computers were revolutionary: tens of thousands of times faster than a human. Here is a comparison against a human, considering 10-digit numbers:

| Operation | Human | ENIAC |
| --- | --- | --- |
| Addition | 1 sum in 10 seconds (0.1 Hz) | 5000 sums per second (5000 Hz) |
| Multiplication | 1 multiplication per minute | 300 multiplications per second |
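As a quick check of the "tens of thousands of times" claim, the ratios can be computed directly from the figures in the table. The variable names are only illustrative, and the human speeds are the rough estimates used above.

```python
# Operations per second, taken from the comparison table.
human_add_per_s = 1 / 10   # 1 sum every 10 seconds
eniac_add_per_s = 5000
human_mul_per_s = 1 / 60   # 1 multiplication per minute
eniac_mul_per_s = 300

add_speedup = eniac_add_per_s / human_add_per_s
mul_speedup = eniac_mul_per_s / human_mul_per_s

print(f"Addition: about {add_speedup:,.0f} times faster")
print(f"Multiplication: about {mul_speedup:,.0f} times faster")
```

With these numbers, ENIAC comes out about 50,000 times faster at addition and about 18,000 times faster at multiplication.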

Understanding Natural Intelligence (Biology and Neuroscience)

While mathematics and engineering provided the structure for computing, it was the study of the human brain that inspired the very idea of machines capable of learning. Early discoveries in biology and neuroscience laid the conceptual foundation for artificial neural networks.

Key developments:

- 1890 – Santiago Ramón y Cajal (Spain):

Through microscopic observation and detailed drawings, he demonstrated that the brain is made up of individual cells, called neurons, rather than a single continuous network. This discovery was foundational for modern neuroscience.

- 1943 – Warren McCulloch & Walter Pitts (USA):

They proposed the first mathematical model of a neuron, combining ideas from biology and logic. Their model used binary inputs and outputs and inspired the architecture of early neural networks.

- 1949 – Donald Hebb (Canada):

Introduced the principle of synaptic learning, often summarized as “neurons that fire together, wire together.” This idea suggested that learning in the brain occurs by strengthening the connections (synapses) between co-activated neurons, a concept that later influenced learning algorithms in AI.
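The last two ideas can be sketched in code. What follows is a simplified illustration and not the original formulation: it assumes a threshold unit with numeric weights (the 1943 model distinguished excitatory and inhibitory inputs instead), and it uses the common textbook form of Hebb's rule, where a weight grows by `rate * input * output`. The names `mp_neuron` and `hebbian_update` are invented for this example.

```python
def mp_neuron(inputs, weights, threshold):
    """Binary threshold unit: output 1 if the weighted sum reaches the threshold."""
    total = sum(w * x for w, x in zip(weights, inputs))
    return 1 if total >= threshold else 0


def hebbian_update(weights, inputs, output, rate=0.1):
    """Strengthen each connection whose input was active together with the output."""
    return [w + rate * x * output for w, x in zip(weights, inputs)]


# With weights (1, 1), threshold 2 behaves like a logical AND and threshold 1 like an OR.
for a in (0, 1):
    for b in (0, 1):
        print(a, b, "AND:", mp_neuron((a, b), (1, 1), 2), "OR:", mp_neuron((a, b), (1, 1), 1))

# Only the first input was active while the neuron fired, so only its weight grows.
print(hebbian_update([0.5, 0.5], [1, 0], output=1))
```

The same unit computes different logical functions just by changing its threshold, and the update rule changes the weights based on activity alone, with no explicit programming.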

These early insights into how the brain functions not only advanced neuroscience but also inspired the first attempts to replicate learning in machines — efforts that would evolve, decades later, into deep learning and modern AI.

The Birth of Artificial Intelligence

In the previous sections we saw that in the century leading up to the 1950s, several scientific disciplines were rapidly evolving. Among them:

- Logic and mathematics

- Electronics and Calculators

- Neuroscience and biology

As these fields matured, an increasing number of researchers began to wonder: what happens when these concepts are put together? Could a machine go beyond calculation and actually think?

One of the first to raise this question was Alan Turing, who had previously explored what a machine could compute. In 1950, he proposed what is now known as the Turing Test (or the “Imitation Game”):

If a machine, through written conversation, can convince a human judge that it is a human, it can be considered “intelligent”.

This shifted the debate from “Can machines calculate?” to the more daring “Can machines think?”

By the mid-1950s, the idea had gained enough traction to lead to a pivotal event: the Dartmouth Conference in 1956, where John McCarthy and colleagues formally coined the term Artificial Intelligence and defined it as a new field of study. Their central question was:

Can we create machines that think like humans?

Conclusions

Well, to be honest, these aren’t really conclusions — we’ve actually just reached the starting point of the topic. But it felt a bit odd to end the post with a paragraph titled “Introduction”.

In the upcoming articles, we’ll explore the early stages of AI and the core ideas behind modern artificial intelligence.

See you next time!